Vault and VSO: same cluster

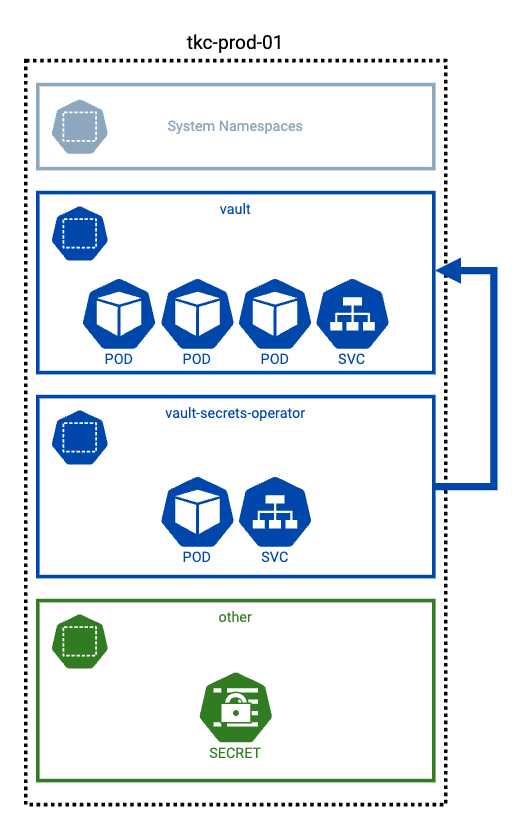

Let me pause and explain a bit about how things are set up in my “production” cluster.

My Vault instance has three pods deployed, along with a service. That service is used within the Kubernetes cluster to provide access to secrets, but it is also exposed via an external load balancer (Avi) so that I can administer Vault and external systems can access secrets too.

In a separate namespace, the Vault Secrets Operator (VSO) is deployed. Its job is to let secrets that are held in Vault, where they belong, be consumed as native Kubernetes Secrets. It works by watching for changes to resources of its supported Custom Resource Definitions (CRDs). Each of these custom resources provides the specification the Operator needs to synchronize a Vault secret to a Kubernetes Secret.

Thus, another application can request a secret from Vault via VSO. That secret will be delivered as a Kubernetes secret and updated when the secret is updated in Vault itself. Naturally, access to secrets in Vault can be managed through Vault’s policies, ensuring that only the secrets that should be consumable by the application are available.
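The contents of the “vso-credentials-read” and “vso-licenses-read” policies aren't shown in this post, so what follows is only a sketch. Assuming the secrets live on a KV version 2 mount called `credentials` (the mount used by the VaultStaticSecret resources later on), a read-only policy for one path prefix could be generated with a small shell function. KV v2 serves secret data under `<mount>/data/<path>`, which is why that segment appears in the rule. The function name and the `services/production` prefix are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an HCL policy that grants read-only access to
# every secret below a prefix on a KV v2 mount.
#   $1 - KV v2 mount path, e.g. "credentials"
#   $2 - secret path prefix, e.g. "services/production"
render_kv2_read_policy() {
  local mount=${1%/} prefix=${2%/}
  # KV v2 serves secret data under <mount>/data/<path>, not <mount>/<path>
  cat <<EOF
path "${mount}/data/${prefix}/*" {
  capabilities = ["read"]
}
EOF
}
```

Piping the output into `vault policy write vso-credentials-read -` would create or overwrite a policy with that name; the trailing `-` tells the Vault CLI to read the policy from standard input.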

Setup Vault

Setting up Vault for this is straightforward: three vault commands get the job done. First, though, I created a couple of simple policies in Vault called “vso-credentials-read” and “vso-licenses-read”. Following the Vault documentation, the commands below enable a Kubernetes auth method at the path “vso”, configure it, and create a role that allows the default service account in the “postgresql”, “harbor”, and “grafana” namespaces to log in and receive those policies.

```shell
KUBE_HOST=$(kubectl config view --raw --minify --flatten --output='jsonpath={.clusters[].cluster.server}')

vault auth enable -path vso kubernetes

vault write auth/vso/config \
  kubernetes_host="$KUBE_HOST" \
  disable_issuer_verification=true

vault write auth/vso/role/vault-secrets-operator \
  bound_service_account_names=default \
  bound_service_account_namespaces=postgresql,harbor,grafana \
  policies=vso-credentials-read,vso-licenses-read \
  audience=vault \
  ttl=1h
```

The first command, which sets KUBE_HOST, is run with a kubeconfig for the production Kubernetes cluster (shown as “tkc-prod-01” in Figure 1).
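The role above sets `audience=vault`, and the matching VaultAuth resource later in this post lists `vault` under `audiences`, so the service account token VSO presents must carry `vault` in its `aud` claim. When a login is rejected, decoding the token's claims is a quick way to check this. The function below is a hypothetical debugging helper (not part of Vault or VSO) that decodes the payload segment of a JWT using only `cut`, `tr`, and `base64`; it does not verify the signature.

```shell
#!/usr/bin/env bash
# Hypothetical debugging helper: print the decoded claims (payload) of a JWT.
# It only decodes; it does NOT verify the token's signature.
jwt_payload() {
  local payload
  # A JWT is header.payload.signature; keep the middle segment and map the
  # base64url alphabet ("_" and "-") back to standard base64 ("/" and "+")
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url omits "=" padding; restore it to a multiple of 4 characters
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 --decode
}
```

The token VSO uses is requested on the fly, but a comparable one can be minted for inspection with `kubectl create token default -n postgresql --audience vault` and passed to `jwt_payload`; its `aud` claim should list `vault`.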

Install VSO

Installing the Vault Secrets Operator (VSO) with Helm is also straightforward:

```shell
helm install vault-secrets-operator hashicorp/vault-secrets-operator -n vault-secrets-operator -f values.yaml
```

The values.yaml file referenced is pretty short, but the important thing to note is that VSO is pointed directly at the service in the vault namespace. We can do that because VSO and Vault are in the same cluster.

```yaml
defaultVaultConnection:
  enabled: true
  address: "https://vault.vault.svc.cluster.local:8200"
  skipTLSVerify: true
controller:
  manager:
    globalTransformationOptions:
      excludeRaw: true
    clientCache:
      persistenceModel: direct-encrypted
      storageEncryption:
        enabled: true
        mount: vso
        keyName: vso-client-cache
        transitMount: demo-transit
        kubernetes:
          role: vault-secrets-operator
          serviceAccount: svc-vault-secrets-operator
```
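With `persistenceModel: direct-encrypted`, VSO encrypts its persisted client cache using Vault's Transit secrets engine, so the `demo-transit` mount and the `vso-client-cache` key named in these values have to exist, and the token VSO obtains through the `vso` auth mount and the `vault-secrets-operator` role must be allowed to encrypt and decrypt with that key. The helper below is a hypothetical sketch that prints such a policy. Its paths follow the Transit API (`<mount>/encrypt/<key>` and `<mount>/decrypt/<key>`); the policy name in the example comments is made up, so check the details against the VSO documentation for your version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an HCL policy that lets a client encrypt and
# decrypt with a single Transit key (used here for VSO's client cache).
#   $1 - Transit mount path, e.g. "demo-transit"
#   $2 - Transit key name,   e.g. "vso-client-cache"
render_transit_policy() {
  local mount=${1%/} key=$2 op
  for op in encrypt decrypt; do
    # Transit encrypt/decrypt are write operations, hence create/update
    cat <<EOF
path "${mount}/${op}/${key}" {
  capabilities = ["create", "update"]
}
EOF
  done
}

# Example (not run here); "vso-client-cache" as a policy name is hypothetical:
#   vault secrets enable -path=demo-transit transit
#   vault write -force demo-transit/keys/vso-client-cache
#   render_transit_policy demo-transit vso-client-cache \
#     | vault policy write vso-client-cache -
```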

Consuming Secrets

Consuming secrets from, for example, my postgresql namespace is pretty easy. The following two resources get the admin password for postgres from Vault and make it available in the postgresql namespace as a Kubernetes Secret called postgresql-db-pw:

```yaml
apiVersion: secrets.hashicorp.com/v1beta1
kind: VaultAuth
metadata:
  name: vso-auth
  namespace: postgresql
spec:
  method: kubernetes
  mount: vso
  kubernetes:
    role: vault-secrets-operator
    serviceAccount: default
    audiences:
      - vault
---
apiVersion: secrets.hashicorp.com/v1beta1
kind: VaultStaticSecret
metadata:
  name: vso-postgresql-admin
  namespace: postgresql
spec:
  type: kv-v2
  mount: credentials
  path: services/production/postgres/postgresql
  destination:
    name: postgresql-db-pw
    create: true
  vaultAuthRef: vso-auth
  refreshAfter: 30s
```
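To confirm that VSO has synced the secret, the hypothetical helper below decodes `key=<base64>` lines, as produced by a `kubectl` go-template over the Secret's `.data` map. The key names depend entirely on what is stored at that path in Vault; because `excludeRaw: true` is set globally, VSO should not add a `_raw` key. The helper prints secret values in clear text, so it is only meant for a quick check on a trusted terminal.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "key=<base64 value>" lines on stdin and print
# "key=<decoded value>". Intended for a quick manual check only, since it
# prints secret values in clear text.
decode_secret_lines() {
  local line key value
  while IFS= read -r line || [ -n "$line" ]; do
    [ -n "$line" ] || continue
    key=${line%%=*}    # text before the first "="
    value=${line#*=}   # text after the first "=" (may end in "=" padding)
    printf '%s=%s\n' "$key" "$(printf '%s' "$value" | base64 --decode)"
  done
}

# Example (not run here):
#   kubectl get secret postgresql-db-pw -n postgresql \
#     -o go-template='{{range $k, $v := .data}}{{$k}}={{$v}}{{"\n"}}{{end}}' \
#     | decode_secret_lines
```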

Vault and VSO: different clusters

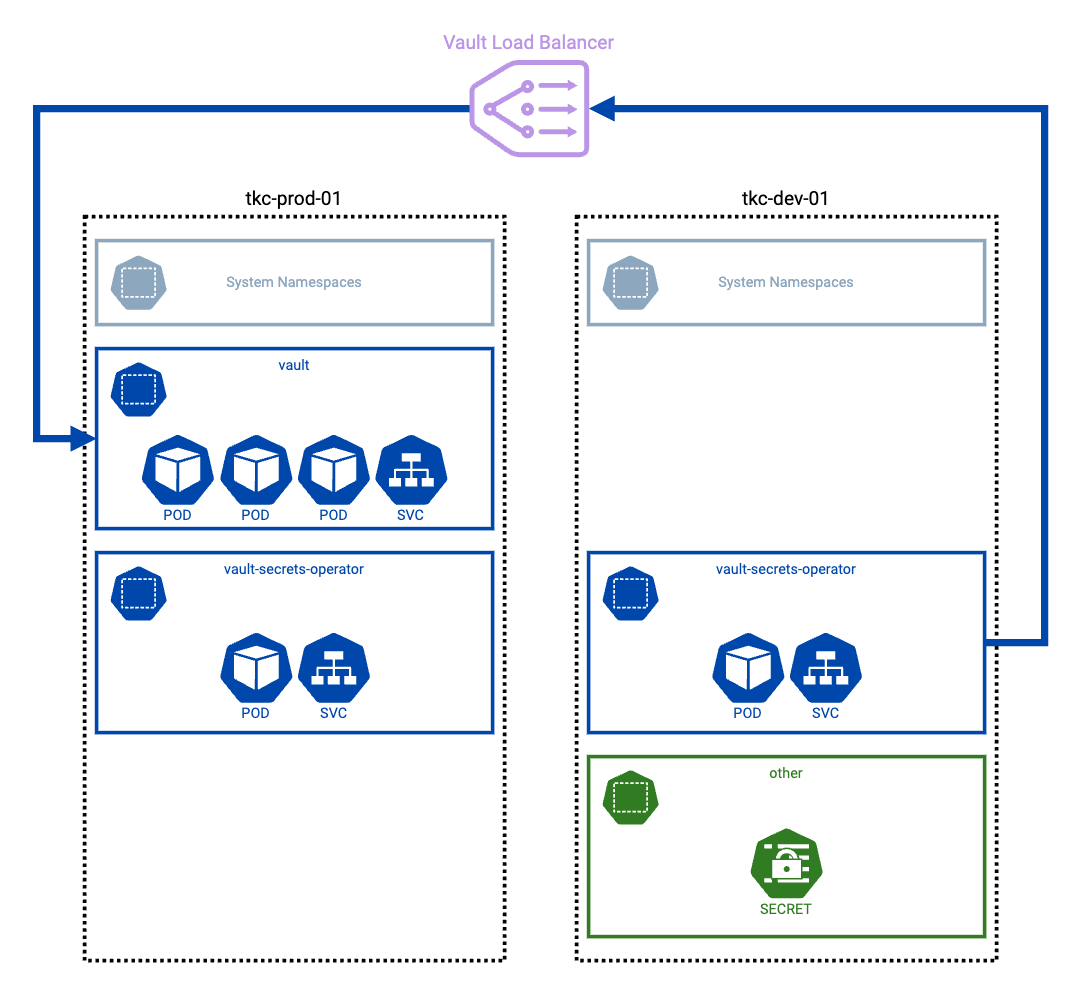

When Vault, VSO, and the applications that need to consume secrets are all in the same cluster, things are fairly easy, and it works well. However, when we’re using a different Kubernetes cluster, do we really want to set up another Vault instance? Maybe, maybe not. I didn’t want to, because I view my secrets as production data and they should live in one secure place.

This does mean, though, that there are some differences between the two scenarios.

The development cluster still has VSO deployed, but not Vault itself, so VSO must be configured slightly differently. The auth method in Vault must also be configured differently, because VSO is not running in the same cluster as Vault.

Configure the VSO Namespace in Kubernetes

Updated 27/11/2024: I forgot to add this section in the original post, but it was kindly pointed out to me recently.

For Vault to authenticate VSO when VSO runs in another cluster, we need a service account in that cluster whose JSON Web Token (JWT) Vault can use to call the Kubernetes TokenReview API; that is why the service account is bound to the system:auth-delegator ClusterRole. The JWT is supplied to Vault in the next section. To set up the namespace, service account, token Secret, and role binding, we can use the following YAML manifest:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: vault-secrets-operator
  labels:
    pod-security.kubernetes.io/enforce: "privileged"
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: svc-vso-dev
  # Must match the subject namespace in the ClusterRoleBinding below
  namespace: default
---
apiVersion: v1
kind: Secret
metadata:
  name: svc-vso-dev
  namespace: default
  annotations:
    kubernetes.io/service-account.name: svc-vso-dev
type: kubernetes.io/service-account-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: role-tokenreview-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
  - kind: ServiceAccount
    name: svc-vso-dev
    namespace: default
```
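The `system:auth-delegator` ClusterRole is what allows Vault, presenting the `svc-vso-dev` token, to call the TokenReview API in the development cluster. Once the manifest is applied, `kubectl auth can-i` with impersonation can confirm that the binding took effect. The wrapper below is a hypothetical convenience function; the `KUBECTL` override exists only so the command can be dry-run or stubbed, and impersonation requires your own user to have impersonate rights (cluster admins normally do).

```shell
#!/usr/bin/env bash
# Hypothetical check: ask the API server whether a service account may create
# TokenReviews, which Vault needs in order to validate tokens from this cluster.
#   $1 - service account namespace, e.g. "default"
#   $2 - service account name,      e.g. "svc-vso-dev"
# Set KUBECTL to override the kubectl command (e.g. for a dry run).
can_review_tokens() {
  local ns=$1 sa=$2
  "${KUBECTL:-kubectl}" auth can-i create tokenreviews.authentication.k8s.io \
    --as="system:serviceaccount:${ns}:${sa}"
}
```

Run with the development cluster's kubeconfig, `can_review_tokens default svc-vso-dev` should print `yes` once the ClusterRoleBinding is in place.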

Setup Vault

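Some extra configuration values, token_reviewer_jwt and kubernetes_ca_cert, are required when configuring the auth method in Vault if VSO is running in a different cluster from Vault itself. When Vault runs inside the same cluster, it can fall back to its own pod’s service account token and CA certificate to review tokens; from outside the cluster it has neither, so both must be supplied. This approach also works if Vault runs outside Kubernetes altogether. The commands are similar to the other scenario, but subtly different.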

```shell
# svc-vso-dev lives in the default namespace (see the ClusterRoleBinding above)
TOKEN_REVIEW_JWT=$(kubectl get secret svc-vso-dev -n default --output='go-template={{ .data.token }}' | base64 --decode)

KUBE_CA_CERT=$(kubectl config view --raw --minify --flatten --output='jsonpath={.clusters[].cluster.certificate-authority-data}' | base64 --decode)

KUBE_HOST=$(kubectl config view --raw --minify --flatten --output='jsonpath={.clusters[].cluster.server}')

vault auth enable -path vso-dev kubernetes

vault write auth/vso-dev/config \
  token_reviewer_jwt="$TOKEN_REVIEW_JWT" \
  kubernetes_host="$KUBE_HOST" \
  kubernetes_ca_cert="$KUBE_CA_CERT" \
  disable_issuer_verification=true

vault write auth/vso-dev/role/vault-secrets-operator \
  bound_service_account_names=default \
  bound_service_account_namespaces=postgresql \
  policies=vso-dev-credentials-read,vso-dev-licenses-read \
  ttl=1h
```

The first three commands, which set TOKEN_REVIEW_JWT, KUBE_CA_CERT, and KUBE_HOST, are run with a kubeconfig for the development Kubernetes cluster (shown as “tkc-dev-01” in Figure 2).
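If any of those three variables comes back empty, for example KUBE_CA_CERT when the kubeconfig references the CA by file path (`certificate-authority`) instead of embedding it (`certificate-authority-data`), the auth method is likely to end up misconfigured, and logins then fail later with a less obvious error. A hypothetical guard like the one below, run before the `vault write` calls, stops a script early instead. It relies on bash indirect expansion, so it is bash-specific.

```shell
#!/usr/bin/env bash
# Hypothetical guard: fail if any of the named shell variables is unset or
# empty, listing every missing one, so a script stops before `vault write`.
require_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named $name
    if [ -z "${!name:-}" ]; then
      printf 'missing value: %s\n' "$name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example (not run here), after the three kubectl commands above:
#   require_vars TOKEN_REVIEW_JWT KUBE_CA_CERT KUBE_HOST || exit 1
```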

Install VSO

Installing the Vault Secrets Operator (VSO) with Helm is still straightforward:

```shell
helm install vault-secrets-operator hashicorp/vault-secrets-operator -n vault-secrets-operator -f values.yaml
```

However, the values.yaml file referenced is slightly different, because VSO must be pointed at Vault’s external load balancer address.

```yaml
defaultVaultConnection:
  enabled: true
  address: "https://vault.lab.mpoore.io:8200"
  skipTLSVerify: true
controller:
  manager:
    globalTransformationOptions:
      excludeRaw: true
    logging:
      level: info
```

I also removed the client cache settings.
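Because VSO in the development cluster now reaches Vault through the external load balancer, it is worth confirming that the address is reachable from that cluster’s network and that the load balancer forwards to an active node. Vault’s unauthenticated `/v1/sys/health` endpoint encodes node state in its HTTP status code; the helper below is a hypothetical sketch that translates the default codes documented for that endpoint.

```shell
#!/usr/bin/env bash
# Hypothetical helper: describe a Vault node from the HTTP status code that
# GET /v1/sys/health returns (using Vault's default code mapping).
describe_vault_health() {
  case $1 in
    200) echo "active: initialized and unsealed" ;;
    429) echo "standby: unsealed, but not the active node" ;;
    472) echo "disaster recovery secondary" ;;
    473) echo "performance standby" ;;
    501) echo "not initialized" ;;
    503) echo "sealed" ;;
    *)   echo "unexpected status: $1"; return 1 ;;
  esac
}

# Example (not run here); -k mirrors skipTLSVerify: true in the values file:
#   code=$(curl -sk -o /dev/null -w '%{http_code}' \
#     https://vault.lab.mpoore.io:8200/v1/sys/health)
#   describe_vault_health "$code"
```

curl reports `000` when it cannot connect at all, which falls through to the last branch and returns a non-zero status.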

Consuming Secrets

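This works in the same way as before. The differences are the auth mount (vso-dev instead of vso) and the secret path (services/development instead of services/production), since I didn’t want development clusters pulling secrets for production applications, after all! The audiences setting is also omitted, matching the vso-dev role, which does not set an audience.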

```yaml
apiVersion: secrets.hashicorp.com/v1beta1
kind: VaultAuth
metadata:
  name: vso-auth
  namespace: postgresql
spec:
  method: kubernetes
  mount: vso-dev
  kubernetes:
    role: vault-secrets-operator
    serviceAccount: default
---
apiVersion: secrets.hashicorp.com/v1beta1
kind: VaultStaticSecret
metadata:
  name: vso-postgresql-admin
  namespace: postgresql
spec:
  type: kv-v2
  mount: credentials
  path: services/development/postgres/postgresql
  destination:
    name: postgresql-db-pw
    create: true
  vaultAuthRef: vso-auth
  refreshAfter: 30s
```

Summary

This might not be the only way to use the Vault Secrets Operator across multiple Kubernetes clusters, but it worked for me and it could be automated easily enough if needed.
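As a rough illustration of that kind of automation, the hypothetical function below renders the VaultAuth and VaultStaticSecret pair used in this post for either environment, varying only the auth mount, the secret path, and (for production) the token audience. The resource names, mounts, and paths are the ones shown above; the function itself, its argument, and the `production`/`development` switch are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical generator: print the VaultAuth and VaultStaticSecret manifests
# from this post for one environment, ready to pipe into `kubectl apply -f -`.
#   $1 - "production" or "development"
render_postgresql_secret() {
  local env=$1 mount audiences=""
  case $env in
    production)
      mount=vso
      # The production role sets audience=vault, so request that audience
      audiences=$'    audiences:\n      - vault\n' ;;
    development)
      mount=vso-dev ;;
    *)
      echo "unknown environment: $env" >&2
      return 1 ;;
  esac
  cat <<EOF
apiVersion: secrets.hashicorp.com/v1beta1
kind: VaultAuth
metadata:
  name: vso-auth
  namespace: postgresql
spec:
  method: kubernetes
  mount: ${mount}
  kubernetes:
    role: vault-secrets-operator
    serviceAccount: default
${audiences}---
apiVersion: secrets.hashicorp.com/v1beta1
kind: VaultStaticSecret
metadata:
  name: vso-postgresql-admin
  namespace: postgresql
spec:
  type: kv-v2
  mount: credentials
  path: services/${env}/postgres/postgresql
  destination:
    name: postgresql-db-pw
    create: true
  vaultAuthRef: vso-auth
  refreshAfter: 30s
EOF
}
```

With a kubeconfig for tkc-dev-01, `render_postgresql_secret development | kubectl apply -f -` would create the development pair.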