I recently moved my Homelab from one datacenter to another. While it was in the first datacenter, I had enabled the Workload Management feature in vSphere and deployed not only a Kubernetes Supervisor but also a Kubernetes workload cluster.

In this cluster, I host a number of applications and services. HashiCorp Vault provides not only a repository for my lab’s secrets but also PKI services: it hosts both a root certification authority (CA) and an issuing CA. The certificates that it churns out on demand are used throughout my homelab.

Also in the cluster are other applications such as Grafana, Harbor, MinIO, Redis and Confluence. Understandably, I need to look after these services along with their data and configuration. So when it came time to think about shutting the Kubernetes cluster down, I wanted to make sure that I wouldn’t suffer any data loss or have to redeploy everything.

Additionally, many of the applications have their ingress managed by VMware NSX Advanced Load Balancer (ALB), and the entire Supervisor and workload cluster sit atop a series of overlay segments managed by VMware NSX.

VMware documentation does exist for this procedure, but I wanted to test it before I needed to do it in anger. So, based on that documentation, I created my own runbook and added some steps and clarifications.

Prerequisites #

- Make sure that all of the necessary DNS entries are correct.

- Make sure that all components are synchronised to a reliable time source (NTP).

- Check the expiry dates of all SSL certificates; you don’t want to be dealing with expired certificates on key management components.

- Check the password expiry of all user and service accounts. This one actually caught me out: although I reset the NSX “admin” password, I had missed that NSX ALB was using that account to talk to NSX. That’s not good practice, and it caused some havoc when I brought things back up again!

- Make sure you have administrative access to:

  - vCenter (both the UI and “root” access to the console)

  - NSX Manager

  - NSX ALB Manager

  - All ESXi hosts (you need to log in to each)

- Back up your data.

- Back up your VMs.
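The DNS and certificate checks above lend themselves to a quick script. Below is a minimal sketch using only the Python standard library; the host names and the 30-day warning threshold are hypothetical placeholders rather than anything from my lab. It treats DNS as healthy when a host’s forward (A) and reverse (PTR) lookups agree.

```python
import socket
import ssl
import time
from typing import List, Optional

# Hypothetical inventory: replace with the FQDNs of your own components.
HOSTS: List[str] = ["vcenter.lab.example", "nsx.lab.example", "alb.lab.example"]
WARN_DAYS = 30  # assumption: flag certificates expiring within 30 days


def dns_round_trip(fqdn: str) -> bool:
    """True if the forward (A) and reverse (PTR) lookups for fqdn agree."""
    try:
        ip = socket.gethostbyname(fqdn)
        reverse_name, _aliases, _addrs = socket.gethostbyaddr(ip)
    except OSError:  # covers socket.gaierror and socket.herror
        return False
    return reverse_name.lower().rstrip(".") == fqdn.lower().rstrip(".")


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left for a notAfter string such as 'Jun  1 12:00:00 2030 GMT'."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400


def fetch_not_after(host: str, port: int = 443, cafile: Optional[str] = None) -> str:
    """Read the server certificate's notAfter field over a verified TLS connection.

    Pass cafile= with your lab root CA (e.g. exported from Vault) if it is not in
    the system trust store. An already-expired certificate fails verification,
    which shows up as an ssl.SSLError in preflight().
    """
    context = ssl.create_default_context(cafile=cafile)
    with socket.create_connection((host, port), timeout=5) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def preflight(hosts: List[str] = HOSTS) -> None:
    """Print a one-line DNS and certificate summary per host."""
    for host in hosts:
        dns = "ok" if dns_round_trip(host) else "MISMATCH"
        try:
            days = days_until_expiry(fetch_not_after(host))
            cert = f"{days:.0f} days left" + (" (RENEW!)" if days < WARN_DAYS else "")
        except (OSError, KeyError) as exc:  # ssl.SSLError is a subclass of OSError
            cert = f"check failed: {exc}"
        print(f"{host}: DNS {dns}, certificate {cert}")
```

Calling `preflight()` from a machine on the management network gives a quick go/no-go list before anything is shut down.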

Process #

Stop vSphere Cluster Services #

- Log in to vCenter.

- Select the vCenter object in the Hosts and Clusters inventory view.

- Select the Configure tab.

- Select Advanced Settings.

- Click Edit Settings.

- Locate or create the `config.vcls.clusters.domain-c<number>.enabled` property.

- Set the value to `false`.

- Save the changes.

The vCLS VMs will be stopped and deleted. We do this to stop VMs from being moved around by vMotion and to prevent any other cluster changes from taking place before vCenter itself is shut down.

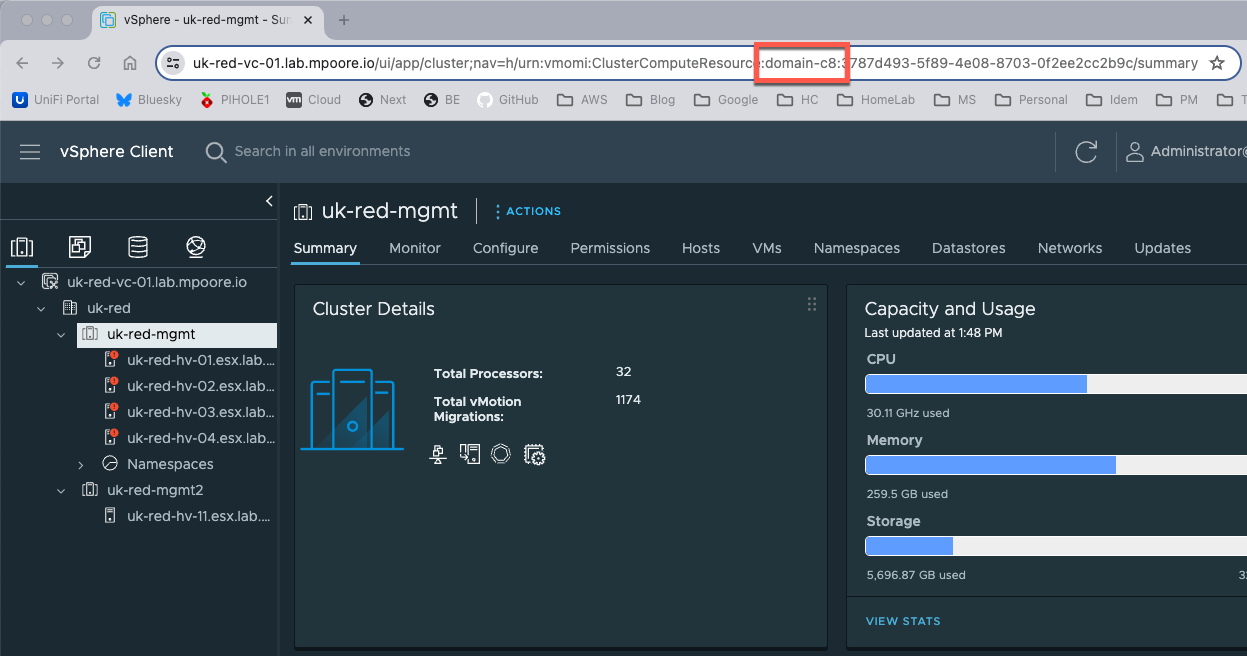

The domain number in the property name can be found by examining the browser URL when viewing the vSphere cluster in the UI. In this case, the number is 8, making the property name `config.vcls.clusters.domain-c8.enabled`.
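If you’d rather not pick the number out by eye, a few lines of Python can pull it from the URL and build the property name. This is a sketch that assumes the cluster’s managed object ID appears in the URL as `domain-c<number>`; the example URL is illustrative rather than copied from my lab, and `vcls_property_for` is a hypothetical helper name.

```python
import re


def vcls_property_for(url: str) -> str:
    """Build the vCLS advanced-setting name from a vSphere Client cluster URL.

    Assumes the cluster's managed object ID (domain-c<number>) appears in the URL.
    """
    match = re.search(r"domain-c(\d+)", url)
    if match is None:
        raise ValueError(f"no cluster ID (domain-c<number>) found in {url!r}")
    return f"config.vcls.clusters.domain-c{match.group(1)}.enabled"


# Illustrative URL shape only; copy the real one from your browser.
example = ("https://vcenter.lab.example/ui/app/cluster;nav=h/"
           "urn:vmomi:ClusterComputeResource:domain-c8:"
           "00000000-0000-0000-0000-000000000000/summary")
print(vcls_property_for(example))  # config.vcls.clusters.domain-c8.enabled
```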

Stop the vCenter Kubernetes Service #

- SSH into the vCenter appliance as the root user (if you land in the appliance shell, type `shell` to get to Bash).

- Stop the Kubernetes service using the command: `vmon-cli -k wcp`

- Verify the service status using the command: `vmon-cli -s wcp`

The output should show `RunState: STOPPED`.
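If this step is scripted, the check can be automated by reading the `RunState` line from the status output. The sketch below assumes `vmon-cli -s wcp` prints `Key: Value` lines including `RunState: STOPPED`, as described above; the sample text is illustrative rather than captured output, and the helper names are hypothetical.

```python
import subprocess


def parse_vmon_status(output: str) -> dict:
    """Turn 'Key: Value' lines from `vmon-cli -s <service>` into a dict."""
    status = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            status[key.strip()] = value.strip()
    return status


def wcp_is_stopped(output: str) -> bool:
    """True if the status output reports RunState: STOPPED."""
    return parse_vmon_status(output).get("RunState") == "STOPPED"


def check_wcp_on_appliance() -> bool:
    """Run on the vCenter appliance (Bash shell) after `vmon-cli -k wcp`."""
    result = subprocess.run(["vmon-cli", "-s", "wcp"],
                            capture_output=True, text=True, check=True)
    return wcp_is_stopped(result.stdout)


sample = "Name: wcp\nStarttype: AUTOMATIC\nRunState: STOPPED\n"  # illustrative only
print(wcp_is_stopped(sample))  # True
```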

Shut Down the vCenter Server #

- Log in to vCenter.

- Locate the vCenter VM in the inventory.

- Right-click it and select Power > Shut Down Guest OS.

With vCenter shut down, any further actions must take place directly on the ESXi hosts themselves.

Shut Down the Supervisor VMs #

- Connect to each ESXi host and log in as the root user.

- Repeat for each of the “SupervisorControlPlaneVM” virtual machines:

  - Select Actions > Guest OS > Shut down.

  - Confirm the operation.

  - Wait until the VM is completely powered off before proceeding to the next.
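This one-VM-at-a-time pattern (shut down, wait for power-off, move on) recurs for the Supervisor, workload cluster, NSX Edge and NSX Manager VMs. As an alternative to clicking through the Host Client, here is a sketch that drives ESXi’s `vim-cmd` over SSH. It assumes SSH is enabled on each host with key-based root login, and that `vim-cmd vmsvc/getallvms` lists VMs as `<Vmid> <Name> [datastore] ...`; the host name and helper names are hypothetical. `vim-cmd vmsvc/power.shutdown` requests a guest OS shutdown, so VMware Tools must be running in the VM.

```python
import re
import subprocess
import time
from typing import Callable, List, Tuple

Runner = Callable[[str], str]  # takes a shell command for one host, returns stdout


def ssh_runner(host: str) -> Runner:
    """Return a runner that executes commands on an ESXi host as root over SSH."""
    def run(command: str) -> str:
        return subprocess.run(["ssh", f"root@{host}", command],
                              capture_output=True, text=True, check=True).stdout
    return run


def list_vms(run: Runner) -> List[Tuple[str, str]]:
    """Parse `vim-cmd vmsvc/getallvms` output into (vmid, name) pairs."""
    vms = []
    for line in run("vim-cmd vmsvc/getallvms").splitlines()[1:]:  # skip header
        match = re.match(r"^(\d+)\s+(.+?)\s+\[", line)
        if match:
            vms.append((match.group(1), match.group(2)))
    return vms


def shutdown_one_at_a_time(run: Runner, name_filter: str,
                           timeout: float = 600, poll: float = 10) -> None:
    """Guest-shutdown each matching VM and wait for power-off before the next."""
    for vmid, name in list_vms(run):
        if name_filter not in name:
            continue
        if "Powered off" in run(f"vim-cmd vmsvc/power.getstate {vmid}"):
            continue  # already down
        run(f"vim-cmd vmsvc/power.shutdown {vmid}")
        deadline = time.monotonic() + timeout
        while "Powered off" not in run(f"vim-cmd vmsvc/power.getstate {vmid}"):
            if time.monotonic() > deadline:
                raise TimeoutError(f"{name} did not power off within {timeout}s")
            time.sleep(poll)
        print(f"{name} is powered off")
```

For example, `shutdown_one_at_a_time(ssh_runner("esxi01.lab.example"), "SupervisorControlPlaneVM")`, repeated for each host. The runner is passed in as a function so the sequencing logic can be exercised without a real host.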

Shut Down the Workload Cluster VMs #

- Connect to each ESXi host and log in as the root user.

- Repeat for each of the Kubernetes cluster control plane virtual machines:

  - Select Actions > Guest OS > Shut down.

  - Confirm the operation.

  - Wait until the VM is completely powered off before proceeding to the next.

- Repeat for each of the Kubernetes cluster worker node virtual machines:

  - Select Actions > Guest OS > Shut down.

  - Confirm the operation.

  - Wait until the VM is completely powered off before proceeding to the next.

The VMs are shut down in this order because, if we started with the worker nodes, the control plane nodes would try to spin up new workers to replace them.
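If you script this step, it’s worth encoding the order explicitly rather than relying on how the VMs happen to be listed. A minimal sketch follows; it assumes, hypothetically, that control plane VM names contain `control-plane` (check the actual names in your inventory first), and the example names are made up.

```python
from typing import List


def workload_shutdown_order(vm_names: List[str], marker: str = "control-plane") -> List[str]:
    """Return control plane VMs first, then workers.

    With the control plane already down, nothing is left running to notice
    missing workers and try to replace them.
    """
    control_plane = sorted(name for name in vm_names if marker in name)
    workers = sorted(name for name in vm_names if marker not in name)
    return control_plane + workers


# Hypothetical VM names for a workload cluster called "apps".
vms = ["apps-workers-x7k2p-1", "apps-control-plane-abcde", "apps-workers-x7k2p-2"]
print(workload_shutdown_order(vms))
# ['apps-control-plane-abcde', 'apps-workers-x7k2p-1', 'apps-workers-x7k2p-2']
```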

Shut Down Virtual Machines on NSX Networks #

If ALB Service Engines (SEs) are present on NSX networks, then the ALB Controller should be shut down first.

- Connect to each ESXi host and log in as the root user.

- Repeat for each virtual machine on an NSX network:

  - Select Actions > Guest OS > Shut down.

  - Confirm the operation.

Note: Depending on the workloads and applications involved, it may be possible to shut several VMs down at the same time.
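Where the workloads do allow simultaneous shutdowns, the waiting can happen in parallel instead of VM by VM. Here is a sketch using Python’s `concurrent.futures`. It assumes you have already looked up the VM IDs (for example with `vim-cmd vmsvc/getallvms` on the host), and that you supply `run`, a hypothetical function that executes a command on one ESXi host (e.g. over SSH) and returns its output.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical: runs one command on a single ESXi host (e.g. over SSH), returns stdout.
Runner = Callable[[str], str]


def shutdown_and_wait(run: Runner, vmid: str, timeout: float, poll: float) -> str:
    """Request a guest OS shutdown for one VM and wait for it to power off."""
    run(f"vim-cmd vmsvc/power.shutdown {vmid}")  # needs VMware Tools in the guest
    deadline = time.monotonic() + timeout
    while "Powered off" not in run(f"vim-cmd vmsvc/power.getstate {vmid}"):
        if time.monotonic() > deadline:
            return "timed out"
        time.sleep(poll)
    return "powered off"


def shutdown_in_parallel(run: Runner, vmids: List[str], max_parallel: int = 4,
                         timeout: float = 600, poll: float = 10) -> Dict[str, str]:
    """Shut down several VMs concurrently; returns a vmid -> outcome mapping."""
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        futures = {vmid: pool.submit(shutdown_and_wait, run, vmid, timeout, poll)
                   for vmid in vmids}
        return {vmid: future.result() for vmid, future in futures.items()}
```

Timeouts are reported rather than raised, so one stuck VM doesn’t hide the outcome of the others.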

Shut Down NSX Edge Nodes #

- Connect to the ESXi hosts running NSX Edge nodes and log in as the root user.

- Repeat for each of the NSX Edge node virtual machines:

  - Select Actions > Guest OS > Shut down.

  - Confirm the operation.

  - Wait until the VM is completely powered off before proceeding to the next.

Shut Down NSX Manager Node(s) #

- Connect to the ESXi hosts running NSX Manager nodes and log in as the root user.

- Repeat for each of the NSX Manager node virtual machines:

  - Select Actions > Guest OS > Shut down.

  - Confirm the operation.

  - Wait until the VM is completely powered off before proceeding to the next.

Shut Down ALL Remaining Virtual Machines #

- Connect to each ESXi host and log in as the root user.

- Repeat for each remaining virtual machine:

  - Select Actions > Guest OS > Shut down.

  - Confirm the operation.

Note: Depending on the workloads and applications involved, it may be possible to shut several VMs down at the same time.

In my Homelab, the Active Directory servers (which provide DNS) and the NTP servers were the last things to go down.

Prepare vSAN for Shutdown #

See VMware documentation for details. I don’t actually use vSAN in my Homelab, but if you do then this is where you’d handle it.

Place ESXi hosts into Maintenance Mode #

- Connect to each ESXi host via SSH.

- Run the following command to place the host into Maintenance Mode: `esxcli system maintenanceMode set -e true -m noAction`

This may seem like overkill, but some VMs (like NSX Edge nodes) are configured to start up automatically. As I was moving datacenters, I wanted an opportunity to check networking and storage access before powering anything virtual on.
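With several hosts, the command can be looped over SSH and confirmed with `esxcli system maintenanceMode get`, which should print `Enabled` or `Disabled`. A minimal sketch, assuming root SSH is enabled on every host; the host names are hypothetical, and `run` is injectable so the logic can be tested without a real host.

```python
import subprocess
from typing import Callable, Iterable

Runner = Callable[[str, str], str]  # (host, command) -> stdout


def ssh(host: str, command: str) -> str:
    """Run a command on an ESXi host as root over SSH and return its stdout."""
    return subprocess.run(["ssh", f"root@{host}", command],
                          capture_output=True, text=True, check=True).stdout


def enter_maintenance_mode(hosts: Iterable[str], run: Runner = ssh) -> None:
    """Put each host into Maintenance Mode and verify the result."""
    for host in hosts:
        # -m noAction is the vSAN mode: enter maintenance mode without moving vSAN data.
        run(host, "esxcli system maintenanceMode set -e true -m noAction")
        state = run(host, "esxcli system maintenanceMode get").strip()
        if state != "Enabled":
            raise RuntimeError(f"{host}: maintenance mode is {state!r}, expected 'Enabled'")
        print(f"{host}: maintenance mode enabled")


# Usage (hypothetical host names):
# enter_maintenance_mode(["esxi01.lab.example", "esxi02.lab.example"])
```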

Shut Down ESXi Hosts #

- Connect to each ESXi host and log in as the root user.

- Repeat for each host:

  - Right-click Host in the navigation pane.

  - Select Shut down.

  - Click the SHUT DOWN button to complete.
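Since the hosts are already in Maintenance Mode, they could alternatively be powered off from SSH with `esxcli system shutdown poweroff`, which takes a `-r`/`--reason` argument and only works while the host is in Maintenance Mode. A sketch that verifies the state before asking each host to power off; the host names and reason text are placeholders.

```python
import shlex
import subprocess
from typing import Callable, Iterable

Runner = Callable[[str, str], str]  # (host, command) -> stdout


def ssh(host: str, command: str) -> str:
    """Run a command on an ESXi host as root over SSH.

    check=False because the session may drop as the host powers off.
    """
    return subprocess.run(["ssh", f"root@{host}", command],
                          capture_output=True, text=True, check=False).stdout


def power_off_hosts(hosts: Iterable[str], reason: str = "Datacenter move",
                    run: Runner = ssh) -> None:
    """Power off each host, refusing if it is not in Maintenance Mode."""
    for host in hosts:
        state = run(host, "esxcli system maintenanceMode get").strip()
        if state != "Enabled":
            # poweroff requires maintenance mode; stop here with a clear message.
            raise RuntimeError(f"{host}: not in maintenance mode ({state!r}); refusing to power off")
        run(host, f"esxcli system shutdown poweroff -r {shlex.quote(reason)}")
        print(f"{host}: power-off requested")
```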

Summary #

That was my runbook. It worked well in testing and very well on the day. Fortunately, the startup process is a little easier, but I’ll save that for another post.