It was partly a change of names that prompted me to write this article. But it’s also to reflect a slight change in my focus this year and to help me cement certain things in my mind.

VMware was producing Kubernetes and container-based solutions long before the Broadcom acquisition. The names of these solutions, their architectures and their capabilities have not made it easy to follow along at times. I believe that two of the recent changes address that.

vSphere Supervisor

It was once called “vSphere with Tanzu”. It was briefly called “vSphere IaaS control plane”. Now though it’s called “vSphere Supervisor”.

The confusing thing about the name “Tanzu” was that it had multiple uses and it was never clear what was being referenced. I was working at KubeCon in Paris this year and so many people would approach the VMware booth and start by saying something like “I have VMware Tanzu…”. We’d have to stop them and clarify what they were actually talking about and whether it was:

- VMware Tanzu Application Platform

- VMware Tanzu Application Services

- VMware Tanzu Hub

- VMware Tanzu Data Services

- VMware vSphere with Tanzu (i.e. vSphere with Kubernetes)

Most of the time it was the last of these, but we had to check.

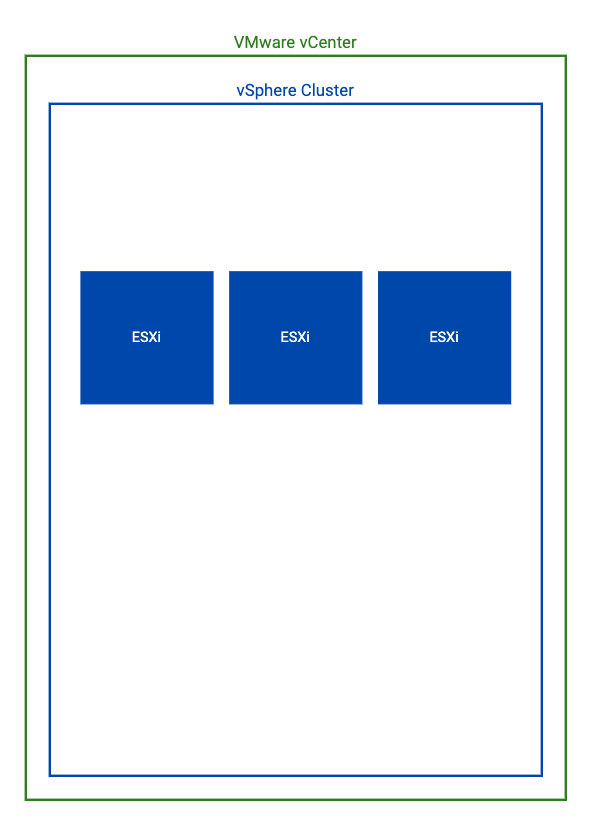

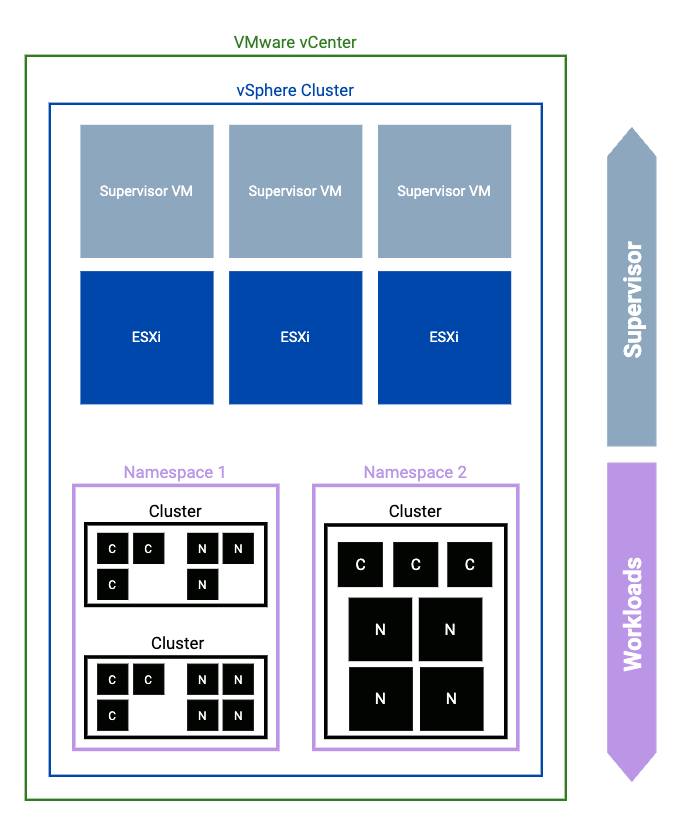

The simplest description of the vSphere Supervisor is that it is a Kubernetes cluster. It has a control plane and it has worker nodes. However, a Supervisor cluster is deployed and managed by VMware vCenter. Let’s start with something that should be familiar to anyone who has used vSphere before: a vSphere cluster.

Hopefully it doesn’t require too much explanation, but in Figure 1 you can see a VMware vCenter instance that contains a vSphere cluster that is formed of three ESXi hosts. Don’t worry for now about where the vCenter instance is located, just know that the logical and physical elements shown are there.

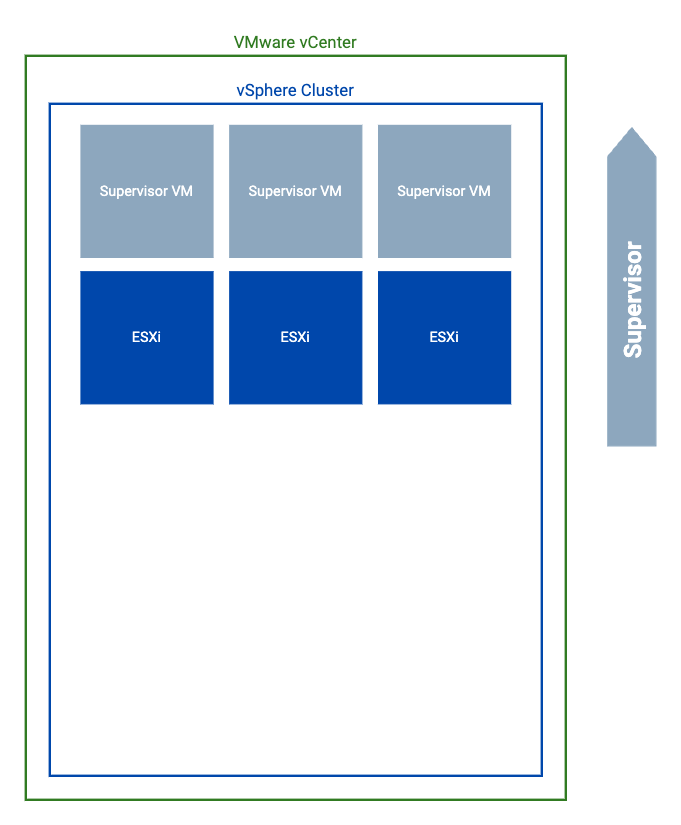

To create a vSphere Supervisor, vCenter deploys three control plane nodes into the cluster. These are virtual machines (VMs) and they form the start of a Kubernetes cluster that contains a number of system level applications that interact with vCenter.

Of course, a Kubernetes cluster requires worker nodes. They’re right there on the diagram in Figure 2 already. Yes, the ESXi hosts are actually the worker nodes in the Supervisor. When creating (or updating) a Supervisor, some additional components are deployed onto each ESXi host in the cluster to make it a worker node.

If you were to connect to your Supervisor and request a list of nodes…

```
$ kubectl get nodes
NAME                               STATUS   ROLES                  AGE   VERSION
42340816e8d2b09ab65f8c5f955450ab   Ready    control-plane,master   19d   v1.29.7+vmware.wcp.1
42342dfaeb9d3081dd7c87a20968bb7f   Ready    control-plane,master   19d   v1.29.7+vmware.wcp.1
4234804d4fe338bae8d41465e59770f7   Ready    control-plane,master   19d   v1.29.7+vmware.wcp.1
uk-red-hv-01                       Ready    agent                  19d   v1.29.3-sph-c8e42be
uk-red-hv-02                       Ready    agent                  19d   v1.29.3-sph-c8e42be
uk-red-hv-03                       Ready    agent                  19d   v1.29.3-sph-c8e42be
uk-red-hv-04                       Ready    agent                  19d   v1.29.3-sph-c8e42be
uk-red-hv-11                       Ready    agent                  19d   v1.29.3-sph-c8e42be
```

You might get output like the above, which comes from my homelab. The first three results are the control plane VMs; the remaining five are the ESXi hosts acting as worker nodes.
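To make the split between control plane VMs and ESXi hosts explicit, here is a minimal Python sketch that groups the nodes from that listing by role. It works on a copy of the output shown above rather than calling `kubectl` itself, and it assumes that any node without the `control-plane` role is an ESXi worker. The function name `group_nodes_by_role` is my own invention for illustration.

```python
# Sample text copied from the `kubectl get nodes` output shown in this article.
# In practice this would be captured from the command itself.
SAMPLE_OUTPUT = """\
NAME                               STATUS   ROLES                  AGE   VERSION
42340816e8d2b09ab65f8c5f955450ab   Ready    control-plane,master   19d   v1.29.7+vmware.wcp.1
42342dfaeb9d3081dd7c87a20968bb7f   Ready    control-plane,master   19d   v1.29.7+vmware.wcp.1
4234804d4fe338bae8d41465e59770f7   Ready    control-plane,master   19d   v1.29.7+vmware.wcp.1
uk-red-hv-01                       Ready    agent                  19d   v1.29.3-sph-c8e42be
uk-red-hv-02                       Ready    agent                  19d   v1.29.3-sph-c8e42be
uk-red-hv-03                       Ready    agent                  19d   v1.29.3-sph-c8e42be
uk-red-hv-04                       Ready    agent                  19d   v1.29.3-sph-c8e42be
uk-red-hv-11                       Ready    agent                  19d   v1.29.3-sph-c8e42be
"""


def group_nodes_by_role(kubectl_output):
    """Split node names into control plane VMs and workers (hypothetical helper).

    Assumption: a node is a worker (ESXi host) if its ROLES column does not
    include "control-plane".
    """
    groups = {"control-plane": [], "worker": []}
    lines = kubectl_output.strip().splitlines()
    for line in lines[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 5:
            continue  # ignore anything that is not a full node row
        name, roles = fields[0], fields[2].split(",")
        if "control-plane" in roles:
            groups["control-plane"].append(name)
        else:
            groups["worker"].append(name)
    return groups


if __name__ == "__main__":
    for role, names in group_nodes_by_role(SAMPLE_OUTPUT).items():
        print(f"{role}: {len(names)} node(s)")
```

Run against the sample, this reports three control plane nodes and five workers, matching the three VMs and five ESXi hosts in my homelab.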

The Supervisor provides declarative API functionality and may optionally run a number of services, such as:

- vSphere Kubernetes Service (VKS)

- VM Service

- Other Supervisor services, for example:

  - Harbor - an open source container registry

  - Contour - an open source ingress controller

  - ArgoCD - a continuous delivery (CD) tool

  - Consumption Interface - a VMware service that enables tools such as VCF Automation to consume / provision resources

vSphere Kubernetes Service (VKS)

Previously this was called the “TKG Service”, or “TKGs”, with TKG itself standing for “Tanzu Kubernetes Grid”. TKG was also used in reference to two other Kubernetes offerings, TKGi and TKGm, at various times in the past. Now, however, we just have the vSphere Kubernetes Service, or VKS.

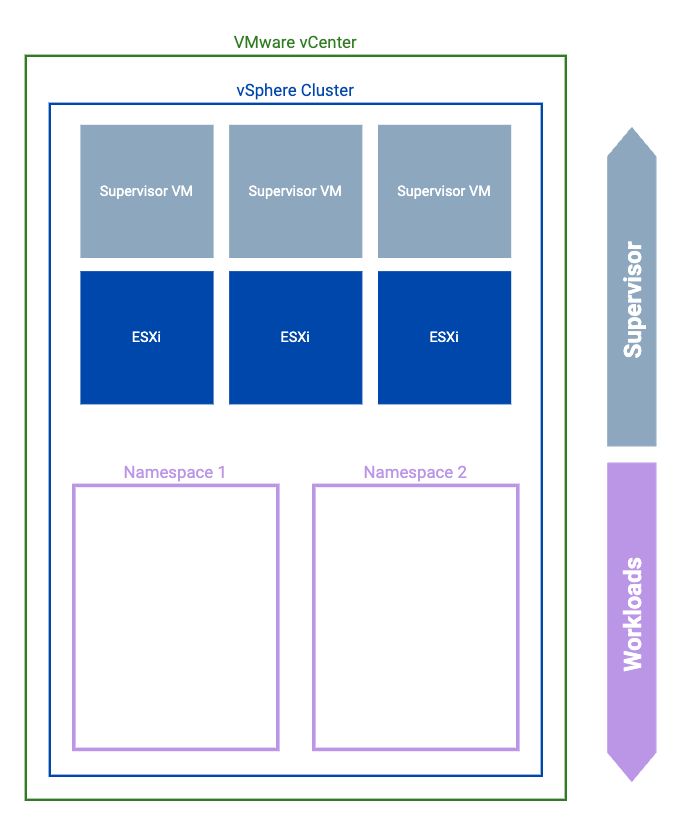

VKS is a service that runs in the Supervisor and can be used to manage and deploy Kubernetes clusters. If you have enabled this service, you can easily deploy and manage multiple Kubernetes workload clusters for all of your applications.

The Supervisor has a number of its own namespaces for its system processes and services, but you can easily create extra namespaces for other purposes. These namespaces don’t run workloads themselves, but they are a container and a configuration point for managing other Kubernetes clusters. You might choose to create namespaces for different teams, or individuals, or perhaps just to differentiate between development and production. In Figure 3 above I have simply called them “Namespace 1” and “Namespace 2”.

Into these namespaces you might create a resource or two, each representing a new Kubernetes cluster, using a custom resource type (defined by a Custom Resource Definition, or CRD) intended for that purpose. As an example:

```yaml
apiVersion: run.tanzu.vmware.com/v1alpha3
kind: TanzuKubernetesCluster
metadata:
  name: tkc-dev-01
  namespace: development
spec:
  topology:
    controlPlane:
      replicas: 3
      vmClass: best-effort-medium
      storageClass: uk-red-tanzu-kubernetes-gold
      tkr:
        reference:
          name: v1.26.5---vmware.2-fips.1-tkg.1
    nodePools:
    - replicas: 3
      name: worker
      vmClass: best-effort-medium
      storageClass: uk-red-tanzu-kubernetes-gold
```

The above would create a new cluster called “tkc-dev-01” in the Supervisor namespace called “development” that has 3 control plane nodes and 3 worker nodes.

VKS would take care of mapping the declarative configuration above to real objects and deploying and configuring the Kubernetes cluster.
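If you wanted several clusters that differ only by name and namespace, the same declarative structure could be generated rather than hand-written. The following is a rough Python sketch, not an official tool: the helpers `make_cluster_manifest` and `count_nodes` are hypothetical, the defaults are simply the values from my example above, and the "production" namespace and "tkc-prod-01" name are made up for illustration. It emits JSON because `kubectl apply -f` accepts JSON manifests as well as YAML, which avoids needing a YAML library.

```python
import json


def make_cluster_manifest(name, namespace, control_plane_replicas=3,
                          worker_replicas=3,
                          vm_class="best-effort-medium",
                          storage_class="uk-red-tanzu-kubernetes-gold",
                          tkr_name="v1.26.5---vmware.2-fips.1-tkg.1"):
    """Build a TanzuKubernetesCluster manifest as a plain dict (hypothetical helper).

    The layout mirrors the YAML example in this article; the default values
    are taken from that example and will differ in other environments.
    """
    return {
        "apiVersion": "run.tanzu.vmware.com/v1alpha3",
        "kind": "TanzuKubernetesCluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "topology": {
                "controlPlane": {
                    "replicas": control_plane_replicas,
                    "vmClass": vm_class,
                    "storageClass": storage_class,
                    "tkr": {"reference": {"name": tkr_name}},
                },
                "nodePools": [
                    {
                        "replicas": worker_replicas,
                        "name": "worker",
                        "vmClass": vm_class,
                        "storageClass": storage_class,
                    }
                ],
            }
        },
    }


def count_nodes(manifest):
    """Return (control plane nodes, worker nodes) requested by a manifest."""
    topology = manifest["spec"]["topology"]
    workers = sum(pool["replicas"] for pool in topology["nodePools"])
    return topology["controlPlane"]["replicas"], workers


if __name__ == "__main__":
    # "production" and "tkc-prod-01" are illustrative names, not from my lab.
    for name, namespace in [("tkc-dev-01", "development"),
                            ("tkc-prod-01", "production")]:
        manifest = make_cluster_manifest(name, namespace)
        print(json.dumps(manifest, indent=2))
```

The output for each cluster could be saved to a file and applied to the Supervisor in the same way as the YAML version.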

With VKS it becomes quite simple to configure and deploy multiple Kubernetes clusters to host application workloads.